| Exam Name: | Databricks Certified Associate Developer for Apache Spark 3.5 – Python | | |
| Exam Code: | Databricks-Certified-Associate-Developer-for-Apache-Spark-3.5 | | |
| Vendor: | Databricks | Certification: | Databricks Certification |
| Questions: | 136 Q&As | Shared By: | august |

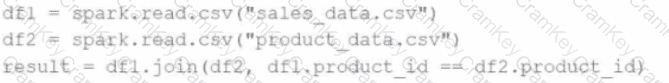

A data engineer writes the following code to join two DataFrames df1 and df2:

df1 = spark.read.csv("sales_data.csv") # ~10 GB

df2 = spark.read.csv("product_data.csv") # ~8 MB

result = df1.join(df2, df1.product_id == df2.product_id)

Which join strategy will Spark use?
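
As a rough way to reason about this question, the sketch below compares each input's estimated size with Spark's automatic broadcast threshold. It assumes default settings, where `spark.sql.autoBroadcastJoinThreshold` is 10 MB. The helpers `fits_auto_broadcast` and `show_join_plan` are hypothetical names introduced here, and `header=True` is an assumption so that `product_id` resolves as a column name.

```python
# Default value of spark.sql.autoBroadcastJoinThreshold (10 MB), assuming it was not overridden.
DEFAULT_AUTO_BROADCAST_THRESHOLD = 10 * 1024 * 1024


def fits_auto_broadcast(estimated_bytes: int,
                        threshold: int = DEFAULT_AUTO_BROADCAST_THRESHOLD) -> bool:
    """Hypothetical helper: roughly mirrors the size check applied to a join side.

    A threshold of -1 disables automatic broadcasting.
    """
    return 0 <= estimated_bytes <= threshold


def show_join_plan(spark, sales_path="sales_data.csv", product_path="product_data.csv"):
    """Print the physical plan for the join in the question (needs a SparkSession)."""
    from pyspark.sql.functions import broadcast  # imported lazily: requires PySpark

    # header=True is an assumption: the files are taken to have a product_id header.
    df1 = spark.read.csv(sales_path, header=True)    # large side, ~10 GB
    df2 = spark.read.csv(product_path, header=True)  # small side, ~8 MB

    # The explicit hint asks Spark to broadcast df2 regardless of its size estimate.
    result = df1.join(broadcast(df2), df1.product_id == df2.product_id)
    result.explain()  # look for BroadcastHashJoin in the printed plan
    return result


print(fits_auto_broadcast(8 * 1024 * 1024))  # ~8 MB product file -> True
print(fits_auto_broadcast(10 * 1024 ** 3))   # ~10 GB sales file -> False
```

Calling `show_join_plan(spark)` on a real session and reading the `explain()` output is the more reliable check, since the plan reflects the actual size estimates and configuration in effect.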

29 of 55.

A Spark application is experiencing performance issues in client mode due to the driver being resource-constrained.

How should this issue be resolved?
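
One commonly suggested remedy is to submit the application in cluster mode, so the driver runs on a cluster node rather than on the submitting machine. The sketch below only assembles a `spark-submit` command line. `build_submit_command` is a hypothetical helper, and the `yarn` master, the `4g` driver memory, and the `my_app.py` path are placeholder assumptions.

```python
import shlex
from typing import List


def build_submit_command(app_path: str,
                         deploy_mode: str = "cluster",
                         driver_memory: str = "4g",
                         master: str = "yarn") -> List[str]:
    """Hypothetical helper: assemble spark-submit arguments for a given deploy mode."""
    if deploy_mode not in ("client", "cluster"):
        raise ValueError("deploy_mode must be 'client' or 'cluster'")
    return [
        "spark-submit",
        "--master", master,
        "--deploy-mode", deploy_mode,      # cluster: the driver runs inside the cluster
        "--driver-memory", driver_memory,  # placeholder value, tune for the workload
        app_path,
    ]


cmd = build_submit_command("my_app.py")
print(shlex.join(cmd))
# spark-submit --master yarn --deploy-mode cluster --driver-memory 4g my_app.py
```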

25 of 55.

A Data Analyst is working on employees_df and needs to add a new column where a 10% tax is calculated on the salary.

Additionally, the DataFrame contains the column age, which is not needed.

Which code fragment adds the tax column and removes the age column?

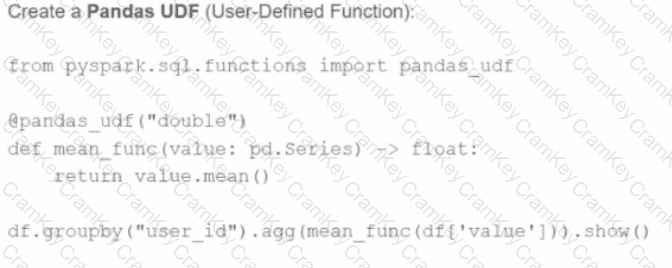

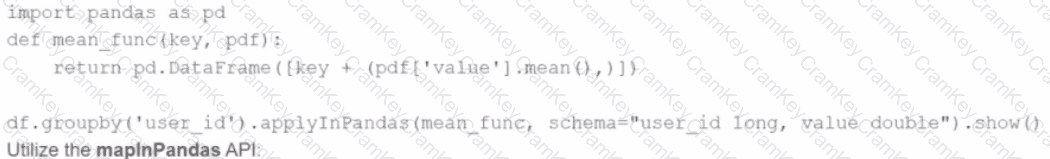

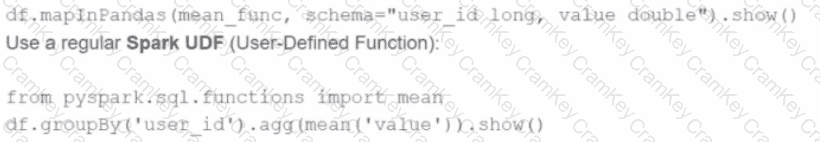
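
For the tax question above, a minimal sketch of the usual pattern: `withColumn` adds the derived column and `drop` removes the unneeded one. The function names and the sample rows are hypothetical; only the `salary` and `age` column names come from the question.

```python
def add_tax_drop_age(employees_df):
    """Return a new DataFrame with a 10% tax column and without the age column."""
    return (
        employees_df
        .withColumn("tax", employees_df["salary"] * 0.1)  # 10% of salary
        .drop("age")                                      # age is not needed
    )


def demo(spark):
    """Run the transformation on made-up rows (needs a SparkSession)."""
    employees_df = spark.createDataFrame(
        [("Ana", 34, 5000.0), ("Raj", 41, 7200.0)],  # illustrative data only
        ["name", "age", "salary"],
    )
    add_tax_drop_age(employees_df).show()
```

Both calls return new DataFrames, so `employees_df` itself is left unchanged.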

A developer is working with a pandas DataFrame containing user behavior data from a web application.

Which approach should be used for executing a groupBy operation in parallel across all workers in Apache Spark 3.5?

A)

Use the applyInPandas API

B)

C)

D)
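
To illustrate option A, here is a minimal sketch of `groupBy(...).applyInPandas(...)`, which hands each group to a plain pandas function that Spark runs on the workers. The column names `user_id` and `duration`, the `share` calculation, and both function names are assumptions made for illustration. The Spark part also needs PyArrow installed.

```python
import pandas as pd


def add_session_share(pdf: pd.DataFrame) -> pd.DataFrame:
    """Per-group function: receives all rows for one user as a pandas DataFrame."""
    out = pdf.copy()
    # Each row's share of that user's total duration.
    out["share"] = out["duration"] / out["duration"].sum()
    return out


def run_grouped(spark, events_pdf: pd.DataFrame):
    """Convert the pandas data to a Spark DataFrame and apply the function per user."""
    sdf = spark.createDataFrame(events_pdf)
    return sdf.groupBy("user_id").applyInPandas(
        add_session_share,
        schema="user_id long, duration double, share double",  # output schema
    )


# The per-group function is ordinary pandas, so it can be tried without a cluster.
sample = pd.DataFrame({"user_id": [1, 1, 2], "duration": [30.0, 10.0, 5.0]})
print(add_session_share(sample[sample["user_id"] == 1]))
```

Keeping the per-group logic in a standalone pandas function makes it easy to unit test locally before running it through Spark.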