| Exam Name: | Databricks Certified Associate Developer for Apache Spark 3.5 – Python |
| Exam Code: | Databricks-Certified-Associate-Developer-for-Apache-Spark-3.5 Dumps |
| Vendor: | Databricks |
| Certification: | Databricks Certification |
| Questions: | 136 Q&A's |
| Shared By: | ivan |

A Spark application is experiencing performance issues in client mode because the driver is resource-constrained.

How should this issue be resolved?

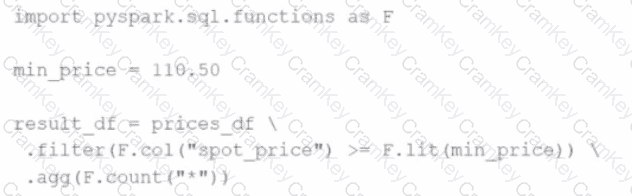

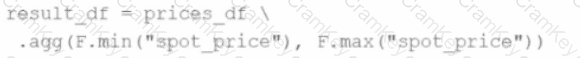

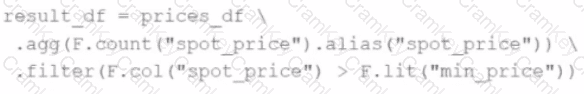

A developer wants to refactor some older Spark code to leverage built-in functions introduced in Spark 3.5.0. The existing code performs array manipulations manually. Which of the following code snippets utilizes new built-in functions in Spark 3.5.0 for array operations?

A)

B)

C)

D)

What is the difference between df.cache() and df.persist() on a Spark DataFrame?

How can a Spark developer ensure optimal resource utilization when running Spark jobs in Local Mode for testing?

Options: