| Exam Name: | Databricks Certified Associate Developer for Apache Spark 3.5 – Python |
| Exam Code: | Databricks-Certified-Associate-Developer-for-Apache-Spark-3.5 |
| Vendor: | Databricks |
| Certification: | Databricks Certification |
| Questions: | 136 Q&As |
| Shared By: | remy |

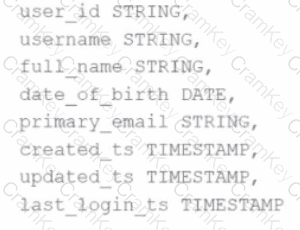

A data scientist has identified that some records in the user profile table contain null values in one or more fields. These records must be removed from the dataset before processing. The schema includes fields such as user_id, username, date_of_birth, and created_ts.

The schema of the user profile table looks like this:

Which block of Spark code can be used to achieve this requirement?

Options:


A Spark developer is developing a Spark application to monitor task performance across a cluster.

One requirement is to track the maximum processing time for tasks on each worker node and consolidate this information on the driver for further analysis.

Which technique should the developer use?

An engineer wants to join two DataFrames df1 and df2 on the respective employee_id and emp_id columns:

df1: employee_id INT, name STRING

df2: emp_id INT, department STRING

The engineer uses:

result = df1.join(df2, df1.employee_id == df2.emp_id, how='inner')

What is the behaviour of the code snippet?

A Spark developer is building an app to monitor task performance. They need to track the maximum task processing time per worker node and consolidate it on the driver for analysis.

Which technique should be used?