| Exam Name: | Databricks Certified Associate Developer for Apache Spark 3.5 – Python | | |
| Exam Code: | Databricks-Certified-Associate-Developer-for-Apache-Spark-3.5 Dumps | | |
| Vendor: | Databricks | Certification: | Databricks Certification |
| Questions: | 136 Q&As | Shared By: | cohen |

35 of 55.

A data engineer is building a Structured Streaming pipeline and wants it to recover from failures or intentional shutdowns by continuing where it left off.

How can this be achieved?
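As background for this scenario, Structured Streaming persists a query's progress (source offsets and any state) in a checkpoint directory, and a query restarted with the same `checkpointLocation` resumes from that recorded progress. The following is a minimal sketch, not a reference implementation: the function name, the paths, the JSON source, the Parquet sink, and the schema are all assumptions chosen for illustration.

```python
def start_recoverable_stream(spark, input_path, output_path, checkpoint_path):
    """Start a file-to-file stream whose progress survives restarts.

    All arguments are hypothetical placeholders supplied by the caller;
    `spark` is an existing SparkSession.
    """
    events = (
        spark.readStream
        .format("json")
        # Streaming file sources require an explicit schema (assumed here).
        .schema("id STRING, value DOUBLE")
        .load(input_path)
    )
    return (
        events.writeStream
        .format("parquet")
        .option("path", output_path)
        # Offsets and state are written here; restarting the query with the
        # same location lets it continue where the previous run stopped.
        .option("checkpointLocation", checkpoint_path)
        .outputMode("append")
        .start()
    )
```

The checkpoint directory should live on fault-tolerant storage and be unique to one query; pointing two different queries at the same location is not supported.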

45 of 55.

Which feature of Spark Connect should be considered when designing an application that will interact remotely with a Spark cluster?
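For context, Spark Connect separates the client application from the Spark driver: the client builds DataFrame operations locally and sends them to a Spark Connect server over gRPC. A rough sketch of what a remote client looks like follows; the helper names and the host are hypothetical, and it assumes a Spark Connect server is already running on the target host.

```python
def connect_url(host, port=15002):
    """Build a Spark Connect URL; 15002 is the default server port."""
    return f"sc://{host}:{port}"


def remote_session(host, port=15002):
    """Open a SparkSession backed by a remote Spark Connect server."""
    # Imported lazily so the URL helper works without PySpark installed.
    from pyspark.sql import SparkSession

    return SparkSession.builder.remote(connect_url(host, port)).getOrCreate()
```

With a session obtained this way, DataFrame code is written as usual, but execution happens on the remote cluster rather than in the client process.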

A DataFrame df has columns name, age, and salary. The developer needs to sort the DataFrame by age in ascending order and salary in descending order.

Which code snippet meets the developer's requirement?
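One way to express a mixed-direction sort in PySpark is sketched below. The function name is hypothetical, and `df` is assumed to be a DataFrame with the `age` and `salary` columns described in the question; this is an illustration of the API, not necessarily the form used in the answer options.

```python
def sort_by_age_then_salary(df):
    """Sort by age ascending, then by salary descending within each age."""
    # The `ascending` list pairs positionally with the column names.
    # An equivalent spelling uses column expressions:
    #   df.orderBy(col("age").asc(), col("salary").desc())
    return df.orderBy("age", "salary", ascending=[True, False])
```

`sort` is an alias of `orderBy`, so either method name works with the same arguments.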

Given the code:

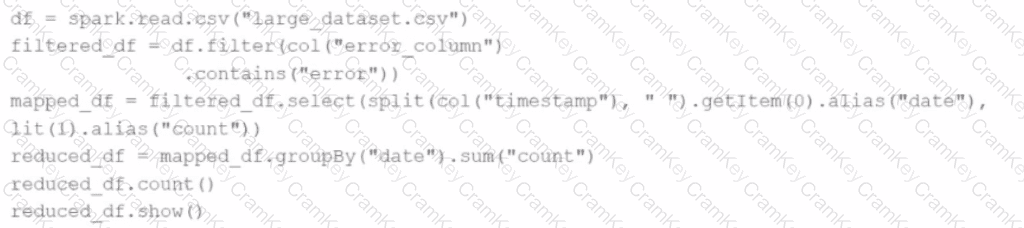

```python
from pyspark.sql.functions import col, lit, split

df = spark.read.csv("large_dataset.csv")
filtered_df = df.filter(col("error_column").contains("error"))
mapped_df = filtered_df.select(split(col("timestamp"), " ").getItem(0).alias("date"), lit(1).alias("count"))
reduced_df = mapped_df.groupBy("date").sum("count")
reduced_df.count()
reduced_df.show()
```

At which point will Spark actually begin processing the data?