| Exam Name: | SnowPro Advanced: Architect Certification Exam |
| Exam Code: | ARA-C01 |
| Vendor: | Snowflake |
| Certification: | SnowPro Advanced: Architect |
| Questions: | 182 Q&A's |
| Shared By: | andrew |

A Snowflake Architect has created a new data share and would like to verify that only specific records in the secure views are visible to consumers of the data share.

What is the recommended way to validate which data the consumers can access?

What considerations apply when using database cloning for data lifecycle management in a development environment? (Select TWO).

An Architect has a VPN_ACCESS_LOGS table in the SECURITY_LOGS schema containing connection and disconnection timestamps, the username of the user, and summary statistics.

What should the Architect do to enable the Snowflake search optimization service on this table?

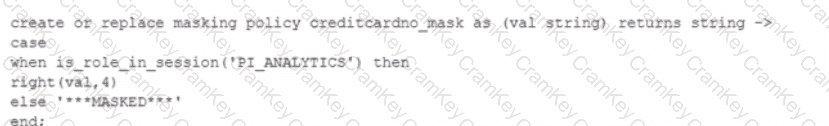

Consider the following scenario, where a masking policy is applied on the CREDITCARDNO column of the CREDITCARDINFO table. The masking policy definition is as follows:

Sample data for the CREDITCARDINFO table is as follows:

| NAME | EXPIRYDATE | CREDITCARDNO |
| --- | --- | --- |
| JOHN DOE | 2022-07-23 | 4321 5678 9012 1234 |

If the Snowflake system roles have not been granted any additional roles, what will be the result?