Implementing Analytics Solutions Using Microsoft Fabric

Last Update Mar 5, 2026

Total Questions : 166

To help you prepare for the Microsoft DP-600 exam, we offer free DP-600 practice questions. After you sign up and provide your details, you get access to the full pool of Implementing Analytics Solutions Using Microsoft Fabric (DP-600) test questions. Other online resources also cover the exam topics, such as video tutorials, blogs, and study guides. You can also practice with realistic DP-600 exam simulations that give feedback on your progress, and you can share your progress with friends and family for encouragement and support.

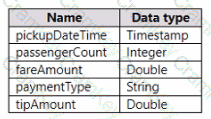

You have a Fabric tenant that contains a lakehouse named Lakehouse1. Lakehouse1 contains a table named Nyctaxi_raw. Nyctaxi_raw contains the following columns.

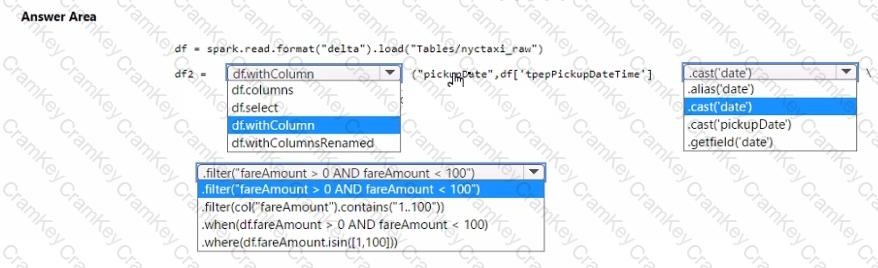

You create a Fabric notebook and attach it to Lakehouse1.

You need to use PySpark code to transform the data. The solution must meet the following requirements:

• Add a column named pickupDate that will contain only the date portion of pickupDateTime.

• Filter the DataFrame to include only rows where fareAmount is a positive number that is less than 100.

How should you complete the code? To answer, select the appropriate options in the answer area. NOTE: Each correct selection is worth one point.

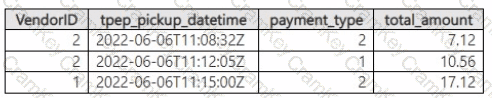
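In PySpark, the two requirements are typically expressed with `to_date` from `pyspark.sql.functions` inside `DataFrame.withColumn`, followed by `DataFrame.filter` with a compound condition. The sketch below does not reproduce the answer area. It replays the same row-level logic in plain Python so you can check each condition, such as whether 0 and 100 are excluded. The helper name `transform_trips` and the sample rows are hypothetical.

```python
from datetime import datetime
from typing import Any


def transform_trips(rows: list[dict[str, Any]]) -> list[dict[str, Any]]:
    """Hypothetical plain-Python analogue of the PySpark transformation.

    A PySpark version would look roughly like (sketch only):
      df.withColumn("pickupDate", to_date(col("pickupDateTime")))
        .filter((col("fareAmount") > 0) & (col("fareAmount") < 100))
    """
    result = []
    for row in rows:
        fare = row["fareAmount"]
        # Keep only strictly positive fares below 100; nulls are dropped,
        # as a Spark filter drops rows where the condition is null.
        if fare is None or not (0 < fare < 100):
            continue
        new_row = dict(row)
        # pickupDate keeps only the date portion of pickupDateTime.
        new_row["pickupDate"] = row["pickupDateTime"].date()
        result.append(new_row)
    return result


# Hypothetical sample rows covering the boundary cases.
sample = [
    {"pickupDateTime": datetime(2024, 1, 5, 8, 30), "fareAmount": 12.5},
    {"pickupDateTime": datetime(2024, 1, 5, 23, 59), "fareAmount": 0.0},
    {"pickupDateTime": datetime(2024, 1, 6, 0, 15), "fareAmount": 100.0},
    {"pickupDateTime": datetime(2024, 1, 6, 9, 0), "fareAmount": -3.0},
]
print(transform_trips(sample))
```

In PySpark, the compound condition must use `&` with parenthesized comparisons, not Python's `and`, because each comparison produces a Column expression.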

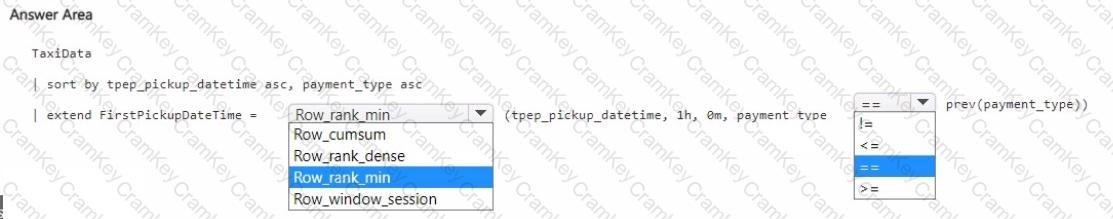

You have a Fabric eventhouse that contains a KQL database. The database contains a table named TaxiData that stores the following data.

You need to create a column named FirstPickupDateTime that will contain the first value of each hour from tpep_pickup_datetime partitioned by payment_type.

NOTE: Each correct selection is worth one point.

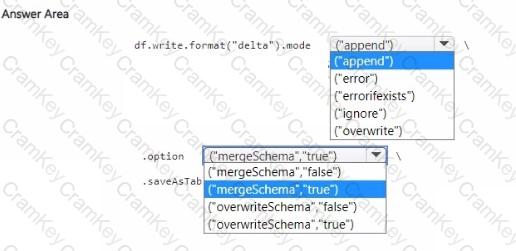
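One reading of this requirement is to truncate each pickup time to its hour, as KQL's `bin(tpep_pickup_datetime, 1h)` does. Then, within each `payment_type`, take the earliest `tpep_pickup_datetime` in that hour. The Python sketch below illustrates only this interpretation. It is not the expected KQL query, and the helper names and sample rows are hypothetical.

```python
from datetime import datetime
from typing import Any


def hour_bucket(ts: datetime) -> datetime:
    # Truncate to the start of the hour, similar to bin(ts, 1h) in KQL.
    return ts.replace(minute=0, second=0, microsecond=0)


def first_pickup_per_hour(rows: list[dict[str, Any]]) -> list[dict[str, Any]]:
    """Add FirstPickupDateTime: earliest pickup per (payment_type, hour)."""
    earliest: dict[tuple[Any, datetime], datetime] = {}
    # First pass: find the earliest timestamp in each partition/hour bucket.
    for row in rows:
        ts = row["tpep_pickup_datetime"]
        key = (row["payment_type"], hour_bucket(ts))
        if key not in earliest or ts < earliest[key]:
            earliest[key] = ts
    # Second pass: attach that first value to every row in the bucket.
    out = []
    for row in rows:
        key = (row["payment_type"], hour_bucket(row["tpep_pickup_datetime"]))
        out.append({**row, "FirstPickupDateTime": earliest[key]})
    return out


# Hypothetical sample rows: two payment types, two hours.
rows = [
    {"payment_type": 1, "tpep_pickup_datetime": datetime(2024, 1, 5, 8, 40)},
    {"payment_type": 1, "tpep_pickup_datetime": datetime(2024, 1, 5, 8, 5)},
    {"payment_type": 2, "tpep_pickup_datetime": datetime(2024, 1, 5, 8, 20)},
    {"payment_type": 1, "tpep_pickup_datetime": datetime(2024, 1, 5, 9, 10)},
]
for r in first_pickup_per_hour(rows):
    print(r)
```

Taking the minimum per bucket gives the same result as sorting by pickup time and keeping the first row, without depending on input order.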

You have a Fabric tenant that contains a lakehouse named Lakehouse1. Lakehouse1 contains a Delta table with eight columns. You receive new data that contains the same eight columns and two additional columns.

You create a Spark DataFrame and assign the DataFrame to a variable named df. The DataFrame contains the new data. You need to add the new data to the Delta table to meet the following requirements:

• Keep all the existing rows.

• Ensure that all the new data is added to the table.

How should you complete the code? To answer, select the appropriate options in the answer area.

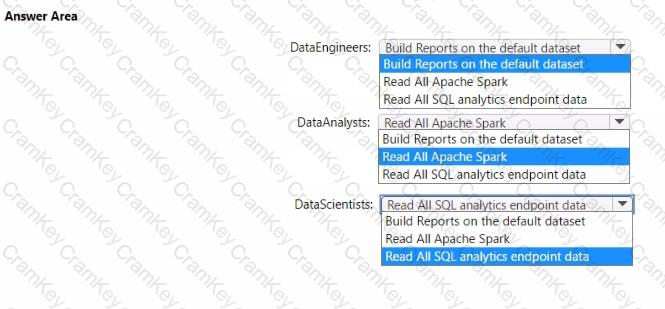
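These requirements describe an append with schema evolution. With Delta Lake on Spark, this is commonly written as `df.write.format("delta").mode("append").option("mergeSchema", "true")` followed by `saveAsTable` with the target table name. Overwrite mode would discard the existing rows. An append without `mergeSchema` is rejected by Delta because the schemas do not match. With `mergeSchema` enabled, the extra columns are added to the table schema, and existing rows read them as null. The plain-Python sketch below models only that merge behavior. The helper name `append_with_merge_schema`, the column names, and the sample rows are hypothetical.

```python
from typing import Any


def append_with_merge_schema(
    table_columns: list[str],
    table_rows: list[dict[str, Any]],
    new_rows: list[dict[str, Any]],
) -> tuple[list[str], list[dict[str, Any]]]:
    """Hypothetical model of appending rows with schema merging."""
    # Merged schema: existing columns first, then new columns in first-seen order.
    merged = list(table_columns)
    for row in new_rows:
        for col in row:
            if col not in merged:
                merged.append(col)
    # Existing rows are all kept; columns they lack are filled with None (null).
    kept = [{c: row.get(c) for c in merged} for row in table_rows]
    # Every new row is appended with all merged columns.
    added = [{c: row.get(c) for c in merged} for row in new_rows]
    return merged, kept + added


# Hypothetical table with eight columns and new data with two extra columns.
old_cols = [f"c{i}" for i in range(1, 9)]
old_rows = [{c: 1 for c in old_cols}, {c: 2 for c in old_cols}]
new_rows = [{f"c{i}": 3 for i in range(1, 11)}]
schema, all_rows = append_with_merge_schema(old_cols, old_rows, new_rows)
print(len(schema), len(all_rows))
```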

You need to assign permissions for the data store in the AnalyticsPOC workspace. The solution must meet the security requirements.

Which additional permissions should you assign when you share the data store? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.